Although data centers can now pack more processing power into less real estate, high-density computing environments can be a huge drain on operating budgets for several reasons: 1) expanding power demands, 2) increasing power costs, and 3) excessive heat.

If you manage a data center, or engineer the architecture for clients who do, you know how critical these issues have become. It's a challenge to conserve energy while supporting these growing loads - without bringing unwanted governmental scrutiny or surcharges for being an energy hog.

The good news is that even a small data center can save tens of thousands of dollars simply through wise choices in management practices, IT hardware, power, and cooling infrastructure. For example, the 3-year utility savings from an energy-efficient server can nearly equal the cost of the server itself. Couple this strategy with energy-efficient power and cooling systems, and a midsized data center with 1,500 servers could save millions of dollars while reducing the organization's carbon footprint.

Sound good? It ought to. If the idea of dramatically reducing energy costs in your data center intrigues you, read on to discover how to gain this edge for your organization.

1. Turn off idle IT equipment.

IT equipment is usually very lightly used relative to its capacity. Servers tend to be only 5- to 15-percent utilized, PCs are 10- to 20-percent utilized, direct-attached storage devices are 20- to 40-percent utilized, and network storage is 60- to 80-percent utilized.

When any of these devices becomes idle, the equipment still consumes a significant portion of the power it would draw at maximum utilization. A typical x86 server consumes 30 to 40 percent of maximum power even when it's producing no work at all.
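To see why idle servers are so costly, a common first approximation treats power draw as rising linearly from an idle floor to the maximum. The sketch below assumes that linear model and a hypothetical 400 W server that idles at 35 percent of maximum power (inside the 30-to-40-percent range above). Real power curves vary by platform and are best measured.

```python
def server_power_watts(utilization: float, max_watts: float, idle_fraction: float) -> float:
    """Estimate server power draw with a simple linear model (an assumption).

    utilization   -- fraction of capacity in use, 0.0 to 1.0
    max_watts     -- power draw at full utilization
    idle_fraction -- share of max_watts drawn while doing no work
    """
    if not 0.0 <= utilization <= 1.0:
        raise ValueError("utilization must be between 0 and 1")
    idle_watts = idle_fraction * max_watts
    # Power climbs linearly from the idle floor to the maximum.
    return idle_watts + (max_watts - idle_watts) * utilization


if __name__ == "__main__":
    MAX_WATTS = 400.0      # hypothetical server rating
    IDLE_FRACTION = 0.35   # hypothetical idle share of maximum power
    for u in (0.0, 0.10, 0.50, 1.0):
        watts = server_power_watts(u, MAX_WATTS, IDLE_FRACTION)
        print(f"{u:>4.0%} utilized: {watts:6.1f} W ({watts / MAX_WATTS:.1%} of max)")
```

With these assumptions, the server draws 166 W at 10 percent utilization: about 41.5 percent of its maximum power for one-tenth of its maximum work.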

To fight this, identify underutilized pieces of equipment and power them down. If a system hosts only one rarely used application, there may be resistance to retiring it, but there may be more cost-effective ways to serve that niche.

Another strategy: Identify and remove "bloatware" (inefficient software that consumes excessive CPU cycles). More efficient software needs fewer CPU cycles, which enables a platform to generate more real processing output for the same power input.

2. Virtualize servers and storage.

Applications are often deployed inefficiently across multiple systems, with a dedicated server and storage for each application, just to maintain lines of demarcation among applications. Each platform draws a large share of the power it would require at peak load, yet does very little work for the money.

With virtualization, you can aggregate servers and storage onto a shared platform while maintaining strict segregation among operating systems, applications, data, and users. Most applications can run on separate "virtual machines" that, behind the scenes, are actually sharing the hardware with other applications. Virtualization dramatically improves hardware utilization and enables you to reduce the number of power-consuming servers and storage devices.
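Because virtualization leaves the total workload unchanged, a first estimate of the consolidation it allows is the total work divided by the utilization each host will run at. The sketch below applies that arithmetic to a hypothetical fleet; the function name and figures are illustrative. It considers CPU load only and ignores memory, I/O, hypervisor overhead, and headroom for peak loads, all of which raise the real host count.

```python
def hosts_after_consolidation(servers: int, avg_util_pct: int, target_util_pct: int) -> int:
    """Estimate physical hosts needed after virtualization (CPU-only, hypothetical).

    Work is measured in "percent of one server", so 100 servers at 15 percent
    represent 1,500 units of work. Integer percentages avoid float rounding.
    """
    if servers < 0 or not 0 <= avg_util_pct <= 100:
        raise ValueError("invalid server count or average utilization")
    if not 0 < target_util_pct <= 100:
        raise ValueError("target utilization must be between 1 and 100")
    total_work = servers * avg_util_pct
    # Ceiling division: a partly used host still has to be powered on.
    return -(-total_work // target_util_pct)


if __name__ == "__main__":
    for target in (50, 60):
        hosts = hosts_after_consolidation(100, 15, target)
        print(f"100 servers at 15% -> {hosts} hosts at {target}%")
```

For 100 hypothetical servers averaging 15 percent utilization, the estimate is 30 hosts at a 50-percent target and 25 hosts at 60 percent.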

"It's reasonable to assume that virtualization will improve server use from an average of 10 to 20 percent for x86 machines to at least 50 to 60 percent in the next 3 to 5 years," wrote Rakesh Kumar in Important Power, Cooling, and Green IT Concerns.

Virtualization won't be the salvation for everyone. Your data center might have to be designed for periodic peak loads; in that case, having underutilized, idle hardware is par for the course. But virtualization can still bring great benefits to most data centers.

3. Consolidate servers, storage, and data centers.

At the server level, blade servers can really help drive consolidation because they deliver more computing for the power consumed. Each blade shares a common power supply, fans, networking, and storage with the other blades in the same chassis, so a given amount of energy input yields more processing output.

Compared to traditional rack servers, blade servers can perform the same work for 20- to 40-percent less power. At 10 cents per kilowatt-hour for utility power, a data center with 1,000 servers could save up to $175,000 per year simply by using more blade servers.
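The savings figure can be reproduced with a short calculation. The article does not state the per-server power draw, so the 500 W average below is an assumption; at that draw, the 40-percent end of the range yields about $175,000 per year. The sketch counts server power only, not the cooling load that power also generates.

```python
HOURS_PER_YEAR = 24 * 365  # 8,760 hours, ignoring leap years


def annual_savings_usd(servers: int, avg_watts_per_server: float,
                       reduction: float, usd_per_kwh: float) -> float:
    """Yearly utility savings from cutting each server's power by `reduction`."""
    kwh_per_year = servers * avg_watts_per_server / 1000.0 * HOURS_PER_YEAR
    return kwh_per_year * reduction * usd_per_kwh


if __name__ == "__main__":
    for reduction in (0.20, 0.40):
        savings = annual_savings_usd(1000, 500.0, reduction, 0.10)  # 500 W is assumed
        print(f"{reduction:.0%} less power -> ${savings:,.0f} per year")
```

The 20-percent end of the range gives about $87,600 under the same assumptions.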

A second opportunity exists in consolidating storage. While you watch storage volumes mount, you're presented with an exciting opportunity to reduce energy usage in two ways:

Tiered storage. The larger the disk drive and the slower its rotational speed, the more efficient its energy usage. Use high-speed drives only where necessary, and use slower drives for applications that don't require instant response.

Consolidated storage. Since larger disk drives are more efficient, consider consolidating storage to improve utilization and warrant the use of those larger drives.

For example, if you replaced 44 mid-tier storage systems (885 terabytes in 146-GB drives) with two high-end systems (934 terabytes in 146-GB and 300-GB drives), the data center could trim energy consumption for storage by 50 percent to save $130,000 per year.

If underutilized data centers could be consolidated in one location, the organization would reap great savings by sharing cooling and back-up systems, not to mention the obvious real estate savings.

4. Turn on the CPU's power-management feature.

More than 50 percent of the power required to run a server is used by the central processing unit (CPU). Chip manufacturers are developing more energy-efficient chipsets, and dual- and quad-core processors handle higher loads for less power. But there are other options for reducing CPU power consumption.

Several CPUs have a power-management feature that optimizes power consumption by dynamically switching among multiple performance states (frequency and voltage combinations) based on CPU utilization — without having to reset the CPU.

When the CPU is operating at low utilization, the power-management feature minimizes wasted energy by dynamically ratcheting down processor power states (lower voltage and frequency) when peak performance isn't required. Adaptive power management reduces power consumption without compromising processing capability.
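Lowering voltage and frequency together pays off because a processor's dynamic (switching) power scales roughly as P ≈ C × V² × f, where C is switched capacitance, V is supply voltage, and f is clock frequency. The sketch below applies that textbook relation to hypothetical scaling factors. It ignores static (leakage) power and the fact that real performance states come in fixed, vendor-defined steps.

```python
def relative_dynamic_power(voltage_scale: float, frequency_scale: float) -> float:
    """Dynamic CPU power relative to the top performance state.

    Uses P ~ C * V^2 * f with capacitance C held constant, so only the
    voltage and frequency ratios matter. Leakage power is not modeled.
    """
    if voltage_scale <= 0 or frequency_scale <= 0:
        raise ValueError("scaling factors must be positive")
    return voltage_scale ** 2 * frequency_scale


if __name__ == "__main__":
    # Hypothetical lower performance state: 85% of peak voltage, 80% of peak clock.
    ratio = relative_dynamic_power(0.85, 0.80)
    print(f"Dynamic power falls to {ratio:.1%} of peak")
```

Cutting the clock by 20 percent while lowering voltage by 15 percent drops dynamic power to about 57.8 percent of peak, a reduction of roughly 42 percent, which is why scaling voltage matters more than scaling frequency alone.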

If the CPU operates near maximum capacity most of the time, this feature offers little advantage, but it can produce significant savings when CPU utilization varies. If a data center with 1,000 servers reduced CPU energy consumption by 20 percent, the result would be annual savings of $175,000.

Many users have purchased servers with this CPU capability but haven't enabled it. If you have the feature, turn it on. If you don't, consider it when making future server purchases.

5. Use IT equipment with high-efficiency power supplies.

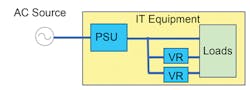

After the CPU, the second biggest culprit in power consumption is the power supply unit (PSU), which converts incoming alternating current (AC) power to direct current (DC) and requires about 25 percent of the server's power budget for that task. Third are the point-of-load (POL) voltage regulators (VRs) that convert the 12V DC into the various DC voltages required by loads such as processors and chipsets (see Figure 1). Overall server efficiency therefore depends on the efficiency of both the internal power supply and the voltage regulation. A typical PSU operates at around 80-percent efficiency, and many run as low as 60 or 70 percent. In a standard server, with the PSU operating at 80-percent efficiency and voltage regulators operating at 75-percent efficiency, the server's overall power-conversion efficiency would be around 60 percent.

Several industry initiatives are improving the efficiency of server components. For example, ENERGY STAR® programs related to enterprise servers and data centers, and 80 PLUS®-certified power supplies are increasing the efficiency of IT equipment.

The industry really took note when Google presented a white paper at the Intel Developer Forum in September 2006, indicating it increased the energy efficiency of typical server power supplies to at least 90 percent, up from 60 to 70 percent.

The initial cost of such an efficient power supply unit is higher, but the energy savings quickly repay it. If the power supply unit operates at 90-percent efficiency and voltage regulators operate at 85-percent efficiency, the overall energy efficiency of the server would be greater than 75 percent. A data center with 1,000 servers could save $130,000 on its annual energy bill by making this change.
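Conversion stages in series multiply, so overall efficiency is the product of the stage efficiencies. The sketch below checks both scenarios from the text and, for a hypothetical 300 W load delivered to processors and chipsets, shows how much power each server would draw at its input. The function names and the load figure are illustrative assumptions.

```python
from math import prod


def chain_efficiency(*stages: float) -> float:
    """Overall efficiency of conversion stages connected in series."""
    if not stages or any(not 0 < s <= 1 for s in stages):
        raise ValueError("need at least one stage efficiency in (0, 1]")
    return prod(stages)


def input_watts(load_watts: float, efficiency: float) -> float:
    """Power drawn at the input to deliver `load_watts` at the output."""
    return load_watts / efficiency


if __name__ == "__main__":
    LOAD_WATTS = 300.0  # hypothetical power needed by processors and chipsets
    scenarios = {"standard (PSU 80%, VR 75%)": (0.80, 0.75),
                 "efficient (PSU 90%, VR 85%)": (0.90, 0.85)}
    for name, stages in scenarios.items():
        eff = chain_efficiency(*stages)
        print(f"{name}: {eff:.1%} overall, {input_watts(LOAD_WATTS, eff):.0f} W drawn")
```

Under these assumptions, each server draws about 108 W less at its input for the same work (500 W versus roughly 392 W).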

6. Use high-efficiency UPSs.

Most IT equipment isn't powered directly from the facility power source. Power typically passes through an uninterruptible power supply (UPS) for power assurance and through power distribution units (PDUs) that distribute the power at the required voltage to racks and enclosures (see Figure 3).

PDUs typically operate at a high efficiency of 94 to 98 percent, so the efficiency of the power infrastructure is primarily dictated by power-conversion efficiency in the UPS. How much power does your UPS consume to do its job?

Advances in UPS technologies have greatly improved the efficiency of these systems. In the 1980s, most UPSs used silicon-controlled rectifier (SCR) technology to convert battery DC power to sinusoidal AC power. Products using this technology operated at a low switching frequency and were 75- to 80-percent efficient at best.

With the advent of insulated-gate bipolar transistor (IGBT) switching devices in the 1990s, switching frequency increased, power-conversion losses decreased accordingly, and UPSs could run at 85- to 90-percent efficiency. When even higher-speed switches became available, UPS designs no longer needed transformers, which helped boost efficiency to 90 to 94 percent.
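Because the UPS and PDU sit in series ahead of the IT load, their efficiencies multiply, and the losses follow from the power drawn at the input. The sketch below uses a hypothetical 500 kW IT load, an assumed 96-percent-efficient PDU (inside the 94-to-98-percent range above), and UPS efficiencies of 80 and 94 percent, the upper end of the SCR-era and transformerless ranges quoted above.

```python
def infrastructure_loss_kw(it_load_kw: float, ups_eff: float, pdu_eff: float) -> float:
    """Power lost in the UPS and PDU while delivering `it_load_kw` to IT equipment."""
    if it_load_kw < 0 or not (0 < ups_eff <= 1 and 0 < pdu_eff <= 1):
        raise ValueError("invalid load or efficiency")
    input_kw = it_load_kw / (ups_eff * pdu_eff)  # power drawn from the utility feed
    return input_kw - it_load_kw


if __name__ == "__main__":
    IT_LOAD_KW = 500.0  # hypothetical IT load
    PDU_EFF = 0.96      # assumed, within the 94-98% range
    for label, ups_eff in (("SCR-era UPS, 80%", 0.80), ("transformerless UPS, 94%", 0.94)):
        loss = infrastructure_loss_kw(IT_LOAD_KW, ups_eff, PDU_EFF)
        print(f"{label}: {loss:.0f} kW lost in UPS and PDU")
```

With these assumed figures, the higher-efficiency UPS cuts UPS and PDU losses from about 151 kW to about 54 kW; less lost power also means less heat for the cooling system to remove.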

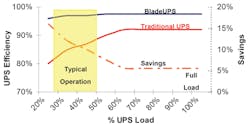

When evaluating a UPS, it's not enough to know the peak efficiency rating it can deliver at full load (the efficiency figure usually given). You're unlikely to be operating the UPS under full load. Since so many IT systems use dual power sources for redundancy, the typical data center loads its UPSs at less than 50-percent capacity, and, in some cases, at as little as 20 to 40 percent. You would expect efficiency to be lower when the UPS is operated at partial loads, but to what degree?
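The low loading follows from redundancy: in a dual-bus (2N) design, each UPS must be able to carry the entire IT load alone, so in normal operation each carries only about half of it, and designers often leave growth headroom on top of that. The sketch below computes the per-UPS load fraction for a hypothetical installation, assuming the load is shared equally.

```python
def per_ups_load_fraction(it_load_kw: float, ups_rating_kw: float, ups_count: int) -> float:
    """Load on each UPS as a fraction of its rating, with the load shared equally."""
    if ups_rating_kw <= 0 or ups_count < 1:
        raise ValueError("rating must be positive and at least one UPS is required")
    return it_load_kw / ups_count / ups_rating_kw


if __name__ == "__main__":
    # Hypothetical 2N system: two 500 kW UPSs feeding a 300 kW IT load.
    fraction = per_ups_load_fraction(300.0, 500.0, 2)
    print(f"Each UPS runs at {fraction:.0%} of its rating")
```

Even at the full 500 kW design load, each UPS would sit at 50 percent, which is why partial-load efficiency matters more than the full-load rating.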

Previous-generation UPSs (before 1990) are markedly less efficient at low loads. Even most of today's UPSs are noticeably less efficient under the low loads typically expected of them (see Figure 2).

Even small increases in UPS efficiency can quickly translate into thousands of dollars. If the new UPS consumed even 10-percent less power than a legacy UPS, a data center with 1,000 servers could save more than $86,000. In addition to dramatic cost savings, high UPS efficiency extends battery runtime and produces cooler operating conditions within the UPS. Lower temperatures extend the life of components and increase overall reliability and performance.

7. Adopt power distribution at 208V/230V.

To satisfy global markets, virtually all IT equipment is rated to work with input voltages ranging from 100V to 240V AC. The higher the voltage, the more efficiently the unit operates; however, most equipment is run on lower-voltage power, sacrificing efficiency for tradition.

Just by using the right power cord, you could save money. An HP (Hewlett-Packard) ProLiant DL380 Generation 5 server, for example, operates at 82-percent efficiency at 120V, 84-percent efficiency at 208V, and 85-percent at 230V. You could gain that incremental advantage just by changing the input power (and the power distribution unit in the rack).
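Using the DL380 G5 efficiency figures quoted above, the sketch below estimates input power at each voltage. The 300 W output load and the 1,000-server fleet are hypothetical, and the $0.10/kWh rate is the one used earlier in the article.

```python
HOURS_PER_YEAR = 8760
PSU_EFFICIENCY = {120: 0.82, 208: 0.84, 230: 0.85}  # DL380 G5 figures from the text


def input_watts(output_watts: float, efficiency: float) -> float:
    """AC input power needed to deliver `output_watts` through the power supply."""
    return output_watts / efficiency


if __name__ == "__main__":
    OUTPUT_WATTS = 300.0  # hypothetical DC load per server
    SERVERS = 1000        # hypothetical fleet size
    USD_PER_KWH = 0.10
    baseline = input_watts(OUTPUT_WATTS, PSU_EFFICIENCY[120])
    for volts, eff in PSU_EFFICIENCY.items():
        watts = input_watts(OUTPUT_WATTS, eff)
        saved_usd = (baseline - watts) * SERVERS / 1000 * HOURS_PER_YEAR * USD_PER_KWH
        print(f"{volts}V: {watts:.1f} W per server, ${saved_usd:,.0f}/yr saved vs 120V")
```

Under these assumptions, moving from 120V to 230V trims roughly 13 W per server, on the order of $11,000 a year across 1,000 servers, before counting the reduced cooling load.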

What about power distribution in the data center? Typically, the UPS operates at 480V, and a power distribution unit (PDU) steps down that power from 480V to 208V or 120V. If you could eliminate that step-down transformer in the PDU by distributing power at 400/230V and operating IT equipment at higher voltages (using technology available today), the power chain would be more efficient. Distributing power at 400/230V can be 3-percent more efficient in voltage transformation and 2-percent more efficient in the power supply in the IT equipment. This slight increase in efficiency is still worthwhile; a data center with 1,000 servers could save $40,000.

8. Adopt best practices for cooling.

Cooling systems can account for 30 to 60 percent of a data center's utility bill, and those figures are too high. Many computer room cooling systems are deployed inefficiently or aren't operated at recommended conditions.

Your organization might have some ready opportunities to reduce cooling costs through these best practices:

- Use hot aisle/cold aisle enclosure configurations. By arranging rows of equipment so that air intakes face a cold aisle and exhausts face a hot aisle, you can create a more uniform air temperature.

- Use blanking panels inside equipment enclosures so that air from hot aisles doesn't mix with air from cold aisles.

- Seal cable cutouts to minimize "bypass airflow," in which cool air short-cycles back to the cooling units instead of circulating evenly throughout the data center. This phenomenon affects as much as 60 percent of the cool-air supply in computer rooms.

- Place computer room air-conditioning units close to the enclosures and perpendicular to hot aisles to maximize cooling where it's needed most. Cooling systems can be optimized further by using air-handlers and chillers with efficient technologies, such as variable frequency drives (VFDs) and air- or water-side economizers, and by setting humidity and temperature according to American Society of Heating, Refrigerating, and Air-Conditioning Engineers (ASHRAE) guidelines.

Take the hypothetical data center with 1,000 servers: If this data center could trim 25 percent from its cooling costs, annual energy savings would be $109,000.
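Working backward from that figure shows what it implies, although the article does not state these totals itself. A $109,000 saving at 25 percent corresponds to an annual cooling bill of about $436,000; if cooling is 30 to 60 percent of the total bill, as cited at the start of this section, the total bill would be roughly $0.7 million to $1.5 million. The sketch below performs that arithmetic.

```python
def implied_cooling_bill(savings_usd: float, cut_fraction: float) -> float:
    """Annual cooling cost implied by saving `savings_usd` with a `cut_fraction` reduction."""
    if not 0 < cut_fraction <= 1:
        raise ValueError("cut_fraction must be in (0, 1]")
    return savings_usd / cut_fraction


def implied_total_bill(cooling_usd: float, cooling_share: float) -> float:
    """Total utility bill if cooling accounts for `cooling_share` of it."""
    if not 0 < cooling_share <= 1:
        raise ValueError("cooling_share must be in (0, 1]")
    return cooling_usd / cooling_share


if __name__ == "__main__":
    cooling = implied_cooling_bill(109_000, 0.25)
    print(f"Implied cooling bill: ${cooling:,.0f} per year")
    for share in (0.30, 0.60):
        total = implied_total_bill(cooling, share)
        print(f"If cooling is {share:.0%} of the bill: ${total:,.0f} total")
```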

9. Conduct an energy audit of your data center.

Many data center managers don't know the efficiency of their IT equipment or site infrastructure, or lack a clear plan for maintaining and improving that efficiency. As a result, many opportunities to reduce energy costs and become greener are overlooked.

How much of the data center's power budget goes to IT systems? How much goes to support systems? For every kilowatt-hour of power being fed to IT systems, how much real IT output do you get? The answers to these questions provide a picture of how much power is consumed for every unit of data-center productivity (Web pages served, transactions processed, or network traffic handled).

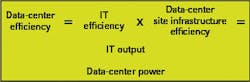

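Data-center efficiency can be expressed as the product of two components:

$$
\text{Data-center efficiency} = \underbrace{\frac{\text{IT output}}{\text{Total input power to IT equipment}}}_{\text{IT efficiency}} \times \underbrace{\frac{\text{Total input power to IT equipment}}{\text{Total power consumed by the data center}}}_{\text{Site infrastructure efficiency}} = \frac{\text{IT output}}{\text{Total power consumed by the data center}}
$$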

In this equation:

- IT efficiency is the total IT output of the data center divided by the total input power to IT equipment.
- Site infrastructure efficiency is the total input power to IT equipment divided by the total power consumed by the data center.
- IT output refers to the true output of the data center from an IT perspective, such as number of Web pages served or number of applications delivered.

In real terms, IT efficiency shows how efficiently the IT equipment delivers useful output for a given electrical power input. Site infrastructure efficiency shows how much power fuels that IT equipment, and how much is diverted into support systems (back-up power and cooling).

These figures enable you to track efficiency over time and reveal opportunities to maximize IT output while lowering input power, and to reduce losses and inefficiencies in support systems.
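As a hypothetical illustration of how the two components combine, the sketch below uses invented readings for one hour: 2,000,000 transactions, 400 kWh delivered to IT equipment, and 800 kWh drawn by the whole facility. Energy over a common interval stands in for power here, and transactions are an assumed output metric; any consistent measure of IT output works.

```python
from dataclasses import dataclass


@dataclass
class EfficiencyReport:
    it_efficiency: float                   # IT output per kWh delivered to IT equipment
    site_infrastructure_efficiency: float  # share of facility energy reaching IT equipment
    data_center_efficiency: float          # IT output per kWh drawn by the whole facility


def data_center_efficiency(it_output: float, it_energy_kwh: float,
                           facility_energy_kwh: float) -> EfficiencyReport:
    """Split overall efficiency into its IT and site-infrastructure components."""
    if it_energy_kwh <= 0 or facility_energy_kwh < it_energy_kwh:
        raise ValueError("facility energy must be at least the (positive) IT energy")
    it_eff = it_output / it_energy_kwh
    site_eff = it_energy_kwh / facility_energy_kwh
    return EfficiencyReport(it_eff, site_eff, it_eff * site_eff)


if __name__ == "__main__":
    report = data_center_efficiency(2_000_000, 400.0, 800.0)  # hypothetical hour of readings
    print(f"IT efficiency: {report.it_efficiency:,.0f} transactions/kWh")
    print(f"Site infrastructure efficiency: {report.site_infrastructure_efficiency:.0%}")
    print(f"Data-center efficiency: {report.data_center_efficiency:,.0f} transactions/kWh")
```

The overall figure, 2,500 transactions per kWh, equals the 2,000,000 transactions divided by the 800 kWh drawn by the facility, confirming that the two components multiply.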

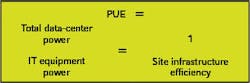

Although there are no industry benchmarks for IT efficiency, there are some for site infrastructure efficiency. The Santa Fe, NM-based Uptime Institute recommends the Power Usage Effectiveness (PUE) ratio, where:

$$
\text{PUE} = \frac{\text{Total data-center power}}{\text{IT equipment power}}
$$

In this equation, total data-center power is the total power required to support all IT equipment, back-up power systems, and cooling systems. IT equipment power is the actual line cord power drawn by all IT equipment in the data center. A practical approximation for the IT equipment power would be the output power from UPSs.

After applying this calculation to several data centers, the Uptime Institute recommends an ideal PUE of 1.6 and a realistic PUE goal of 2 for a well-designed, well-operated data center.
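Since the IT equipment power in the PUE ratio is the total input power to IT equipment, site infrastructure efficiency is simply 1/PUE. The sketch below computes both from hypothetical meter readings, using UPS output as the approximation for IT equipment power, and shows what the Uptime Institute targets mean in those terms: a PUE of 2 means half of the facility's power reaches IT equipment, and a PUE of 1.6 means 62.5 percent does.

```python
def pue(total_facility_kw: float, it_equipment_kw: float) -> float:
    """Power Usage Effectiveness: total facility power divided by IT equipment power."""
    if it_equipment_kw <= 0 or total_facility_kw < it_equipment_kw:
        raise ValueError("total power must be at least the (positive) IT power")
    return total_facility_kw / it_equipment_kw


def site_infrastructure_efficiency(pue_value: float) -> float:
    """Fraction of facility power that reaches IT equipment (the reciprocal of PUE)."""
    if pue_value < 1:
        raise ValueError("PUE cannot be below 1")
    return 1 / pue_value


if __name__ == "__main__":
    # Hypothetical readings: 1,000 kW at the utility meter, 500 kW at the UPS outputs.
    measured = pue(1000.0, 500.0)
    print(f"PUE = {measured:.2f}, site infrastructure efficiency = "
          f"{site_infrastructure_efficiency(measured):.1%}")
    for target in (2.0, 1.6):
        print(f"PUE {target}: {site_infrastructure_efficiency(target):.1%} reaches IT equipment")
```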

10. Prioritize actions to reduce energy consumption.

As suggested in No. 9, auditing the energy efficiency of your data center will identify and prioritize opportunities to reduce energy consumption. You could improve energy efficiency by taking any of the actions mentioned in this article:

- Identifying and powering down underutilized equipment.
- Increasing equipment utilization through virtualization and consolidation.
- Selecting high-efficiency IT equipment.
- Upgrading UPSs to higher-efficiency technology.
- Implementing energy-efficient practices for cooling.
- Adopting power distribution at 208V/230V.
- In a greenfield data center, or in a major expansion/upgrade of an existing data center:
  - Get executive-level sponsorship and form a cross-functional team to develop an energy strategy for IT operations.
  - Include energy efficiency as a key requirement in design criteria alongside reliability and uptime.
  - Consider energy efficiency in calculations of total cost of ownership when selecting new IT, back-up power, and cooling equipment.

Chris Loeffler is project manager, data center solutions, at Raleigh, NC-based Eaton Powerware.